AMD's chief exec Lisa Su has predicted the chip designer's Instinct accelerators will drive tens of billions of dollars in annual revenue in coming years, despite DeepSeek-inspired speculation that next-gen AI models may not need the same level of compute infrastructure used to produce such tools today.

"Relative to DeepSeek, we think that innovation on the models and the algorithms is good for AI adoption," Su told analysts on Tuesday's Q4 earnings call, referring to the surprisingly capable made-in-China LLM family. DeepSeek was built on compute infrastructure so efficient – with fewer GPUs than one might expect – that it has investors wondering whether cutting-edge AI truly needs the billions in capex Silicon Valley demands.

In other words, do organizations really need to spend so much on the likes of AMD, buying its AI accelerators and swelling its revenues, if DeepSeek shows you can do more with less? Isn't this a warning sign of a market correction?

"The fact that there are new ways to bring about training and inference capabilities with less infrastructure is actually good," said Su, "because it allows us to continue to deploy AI compute in the broader application space and [with] more adoption."

And speaking of adoption, Su expects Instinct revenues to increase significantly compared to the $5 billion-plus they brought in during the 2024 fiscal year. The CEO stopped at that enthusiastic outlook and declined to commit to a revenue figure. Su did, however, suggest that AMD's strongest growth would come in the second half of FY 2025 (which ends on December 28 and therefore all but overlaps the calendar year), driven by shipments of its next-gen MI355X accelerators.

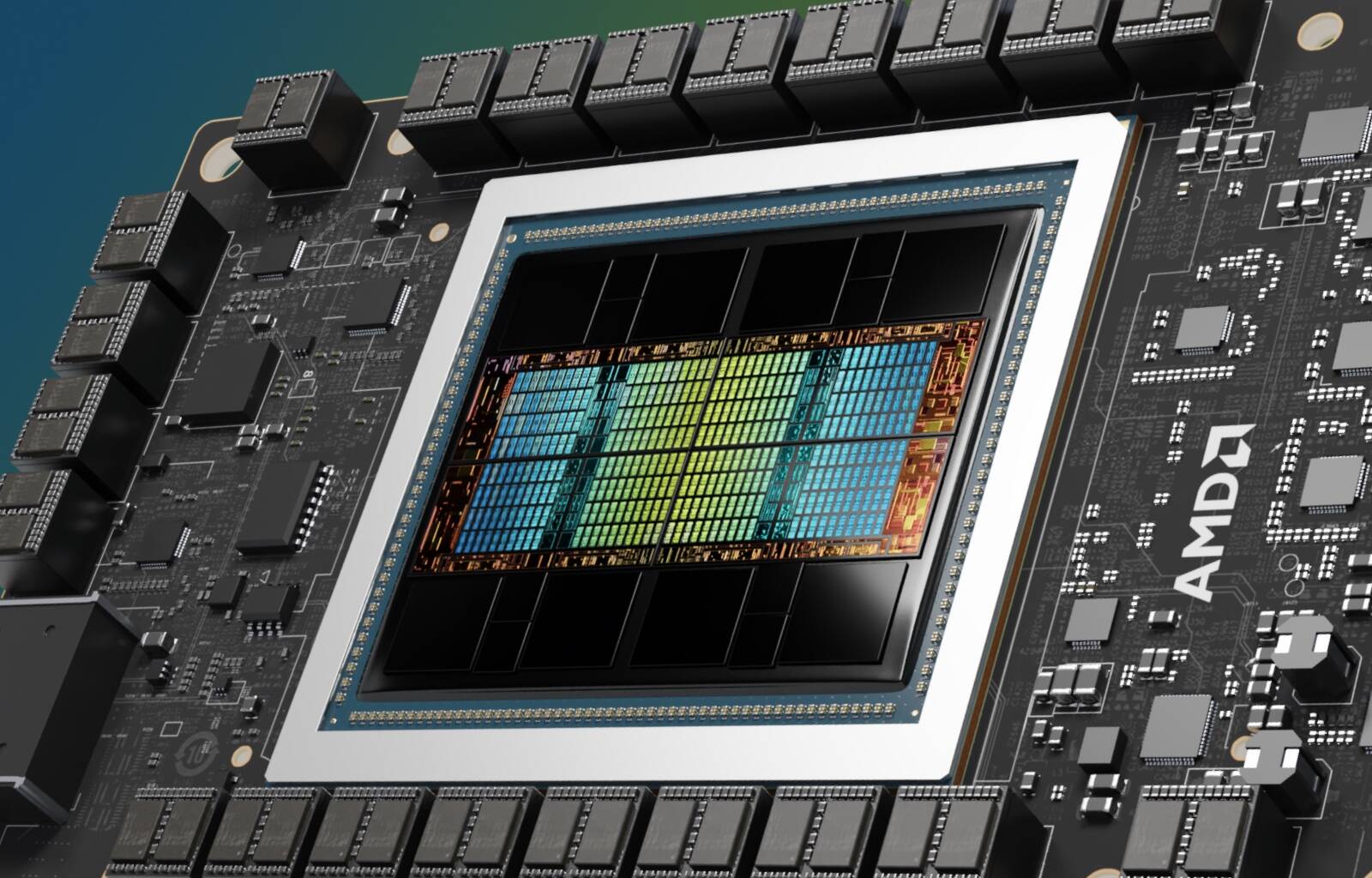

Those chips were initially expected to ship toward the end of the second half. However, given the performance of early silicon and customer demand, Su said the chip designer now expects to ramp production toward the middle of the year. First teased during AMD's Advancing AI event last fall, the next-gen GPU will use the CDNA 4 architecture and boast dense floating point performance roughly on par with Nvidia's B200 accelerators while delivering 50 percent more memory at 288GB.

Su also took the opportunity to tease AMD's next-gen MI400-series accelerators, which will apparently be offered in a rack-scale architecture. "The CDNA-Next architecture takes another major leap, enabling powerful rack-scale solutions that tightly integrate networking, CPU and GPU capabilities at the silicon level to support Instinct solutions at data center scale," she said. Su didn't go into much detail as to what these rack-scale systems will look like, but if we had to guess, they'll be somewhat reminiscent of the 120-kilowatt Nvidia NVL72 systems we looked at last spring, only using Ultra Ethernet and UALink rather than InfiniBand and NVLink.

AMD isn't the only one to jump on the rack-scale bandwagon. Last week, Intel revealed its Jaguar Shores GPUs will also embrace the form factor, assuming they ever make it out of the lab.

On Tuesday's earnings call, Su also downplayed the threat custom AI ASICs pose to AMD's GPU business.

Custom silicon for AI workloads has become increasingly common among hyperscalers and cloud providers. In particular, Google and Amazon have now deployed large quantities of their respective tensor processing units (TPUs) and Trainium accelerators to support internal and external model development. Late last year, Amazon revealed plans to deploy more than 100,000 Trainium2 accelerators under Project Rainier for use by AI model builder Anthropic.

Broadcom has told shareholders it sees a pipeline for millions of similar devices. Despite this, Su doesn't expect dedicated AI ASICs to replace GPUs any time soon. "I have always been a believer in you need the right compute for the right workload," she said.

"Given the diversity of workloads, large models, medium models, small models, inference... broad foundation models or very specific models, you're going to need all types of compute, and that includes CPUs, GPUs, ASICs, and FPGAs."

In total, Su expects these accelerators to eventually make up a total addressable market (TAM) of more than $500 billion. However, she notes that the AI algorithms behind the generative AI boom are still evolving.

"My belief is, given how much change there is still going on in AI algorithms, that ASICs will still be the smaller part of that TAM."

How this mix will actually shake out remains to be seen, but it's clear from AMD's Q4 earnings that the company has a lot riding on its Instinct accelerators, which accounted for roughly a fifth of its annual revenues.

Over the 2024 fiscal year, AMD's profits nearly doubled, surging 92 percent to $1.6 billion. Revenues for the year, meanwhile, were up 14 percent to $25.8 billion.

Of that, $482 million in net income and $7.7 billion in revenues were realized during the fourth quarter. Unsurprisingly, AMD's datacenter division again accounted for the lion's share of revenues, at $3.9 billion for the quarter, up 69 percent year on year. For the full year, datacenter sales totaled $12.6 billion, up 94 percent, of which Instinct GPUs made up $5 billion.

AMD's client computing division won $2.3 billion in revenues in Q4, an increase of 58 percent compared to this time last year. AMD's gains in this arena aren't terribly surprising considering it recently refreshed both its mobile and desktop products.

However, AMD's gaming and embedded divisions continued to struggle in the fourth quarter. Gaming revenues came in at $563 million, down 59 percent year over year, even though Q4 is usually a good season for gaming revenue as high-powered PCs and consoles appear under Christmas trees.

Executives pointed to the advanced age – five whole years! – of Sony's PlayStation and Microsoft's Xbox consoles as a reason for the poor performance: console sales decline as the platforms age, and with them demand for the custom AMD processors inside. Su suggested the gaming and embedded segments could rebound in 2025 as AMD targets mainstream PC gamers with its Radeon 9000-series GPUs.

AMD's embedded segment, which includes its FPGAs and Adaptive SoC family of products, also declined during the quarter, falling 13 percent year over year to $923 million. According to Su, market demand for FPGAs improved in the aerospace and defense spaces but remains weak among industrial and communications customers.

Looking ahead to the first quarter, AMD is forecasting revenues of $7.1 billion, give or take $300 million. That would be an increase of about 30 percent compared to the same quarter of 2024, and a seven percent decline from the prior quarter.

AMD's share price dropped about nine percent in after-hours trading, largely because its fourth-quarter datacenter sales fell short of the $4.1 billion Wall Street had anticipated. ®