Home | News | Meta Launches Llama 4 Series Multimodal Ai With Record Breaking Context Window Meta launches Llama 4 series: Multimodal AI with record-breaking context window LLaMA 4 Scout, equipped with 17 billion active parameters and 16 experts, runs efficiently on a single NVIDIA H100 GPU and introduces an industry-first 10 million token context window. It surpasses competitors like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.

1 in performance. On the other hand, LLaMA 4 Maverick also features 17 billion active parameters but scales up with 128 experts and a total of 400 billion parameters. It outperforms GPT-4o and Gemini 2.

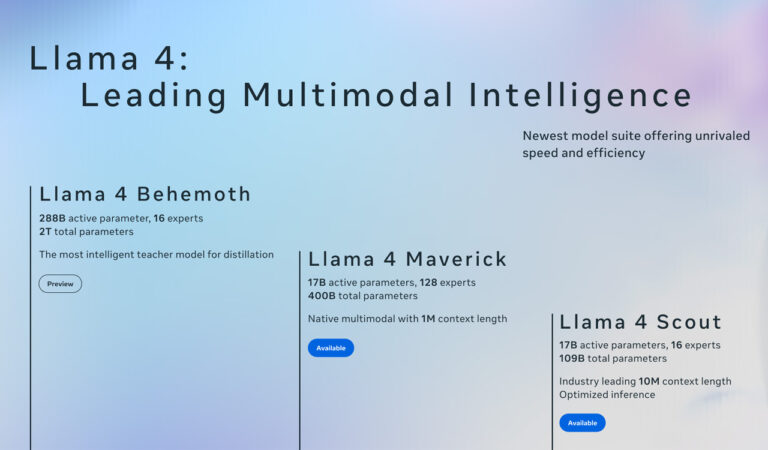

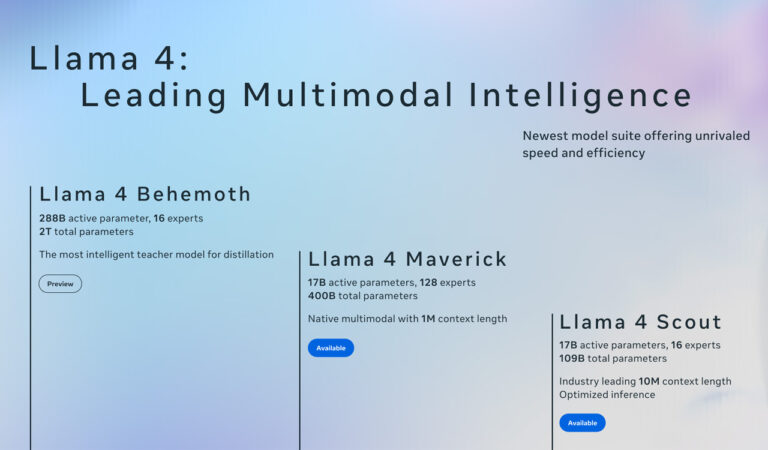

0 Flash in benchmark tests, all while remaining cost-effective By T Ivan Nischal Updated On - 6 April 2025, 11:14 AM Hyderabad: The Llama 4 series has been introduced as an innovative family of open-weight, natively multimodal AI forms. Meta offers a high-performance personalized experience across applications. These include Llama 4 Scout and Llama 4 Maverick.

Both models use a mixture-of-experts (MoE) architecture, designed to make training, reasoning, and multimodal processing more efficient. Llama 4 Scout, with 17 billion active parameters and 16 experts, can run on a single NVIDIA H100 GPU. Notably, it has an industry-leading 10 million-token context window.

It also outperforms the likes of Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1.
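The gap between "active" and "total" parameters follows from how a mixture-of-experts model works: a router sends each token to only a few experts, so most of the weights sit idle for any given token. The toy Python sketch below illustrates that idea. It is not Meta's implementation: the router scores, the top-1 selection, and the helper names `route_top_k` and `active_fraction` are hypothetical. The only figures taken from this article are Maverick's 17 billion active and 400 billion total parameters.

```python
def route_top_k(router_scores, k=1):
    """Return the indices of the k highest-scoring experts for one token."""
    ranked = sorted(
        range(len(router_scores)),
        key=lambda i: router_scores[i],
        reverse=True,
    )
    return ranked[:k]


def active_fraction(active_params, total_params):
    """Share of the model's parameters used for a single token."""
    return active_params / total_params


# Hypothetical router scores for one token over four experts (illustrative only).
scores = [0.10, 0.70, 0.15, 0.05]
print(route_top_k(scores, k=1))  # [1]

# Maverick's figures as reported in this article: 17B active, 400B total.
print(f"{active_fraction(17e9, 400e9):.2%}")  # 4.25%
```

On the article's figures, only about 4 percent of Maverick's parameters are used for any single token, which is how a model with 400 billion total parameters can be described as cost-effective to run.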

Meanwhile, Llama 4 Maverick also has 17 billion active parameters, but it uses 128 experts and a total of 400 billion parameters. It outperforms GPT-4o and Gemini 2.0 Flash in benchmark tests while remaining cost-effective. Both models were distilled from Llama 4 Behemoth, Meta's largest model to date, which has 288 billion active parameters and is still in training.

Behemoth outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on STEM benchmarks. Advances in the Llama 4 models include better vision encoders, extended context lengths, and stronger image-text understanding. Post-training innovations include adaptive reinforcement learning and improved supervision techniques that strengthen multimodal and reasoning capabilities.

Meta has also rolled out safety tools, namely Llama Guard, Prompt Guard, and CyberSecEval, aimed at improving content moderation and cybersecurity evaluation, accompanied by updated bias mitigation strategies. Developers can now download Llama 4 Scout and Maverick from llama.com and Hugging Face, or try them through Meta AI in apps such as WhatsApp and Messenger.

This opens the door wider to open AI development and encourages developers, researchers, and enterprises to build applications that are intelligent, scalable, efficient, and responsible.
