With the arrival of iOS 18, both of the major mobile platforms now offer some form of transcription for their built-in recording apps. But which one wins an iPhone vs. Android transcription face-off? That's what I hope to find out by pitting Apple's Voice Memos app against Google's Recorder app in a series of tests.

Google is an old hand at transcription, having added it to the Recorder app five years ago. In fact, Google's Pixel phones were the first flagships to offer that capability as a built-in feature, though in the ensuing years, it's rolled out to more Android phones. The iPhone is a relative newcomer in this area, having only added transcription to Voice Memos with this year's iOS 18 update.

But Apple is making up for lost time, also giving its Phone app the ability to record and transcribe phone calls with the iOS 18.1 update. Google still enjoys one edge over Apple: its transcripts appear in real time as you're recording lectures, meetings and whatnot.

With the iPhone, you have to wait until a recording is done and then choose to get a transcript from a menu of options. But that's not what I'm interested in testing here. Rather, I want to see whose transcription feature is more accurate, as that will save you considerable time when reviewing recordings to refresh your memory on what exactly was said.

For this face-off, I used an iPhone 12 running iOS 18.2 and a Pixel 8a running Android 15. Neither phone is the latest and greatest hardware from either phone maker, but this is a face-off that depends entirely on the strength of the software running on those phones.

I'd wager you'll see similar results on newer iPhones and Pixels. I tested the accuracy of Voice Memos and Recorder transcriptions in three different scenarios designed to mirror situations in which people might actually use those apps. Here's what my Voice Memos vs. Google Recorder transcription face-off revealed.

Face-off 1: Single speaker

A lot of recordings you make with either Voice Memos on the iPhone or Recorder on the Pixel will feature just one speaker: probably yourself, if you're in the habit of recording reminders or verbal notes when a sudden brainstorm hits. There's also the prospect of recording lectures, where an accurate transcript will be vital for reviewing later when you're studying for tests.

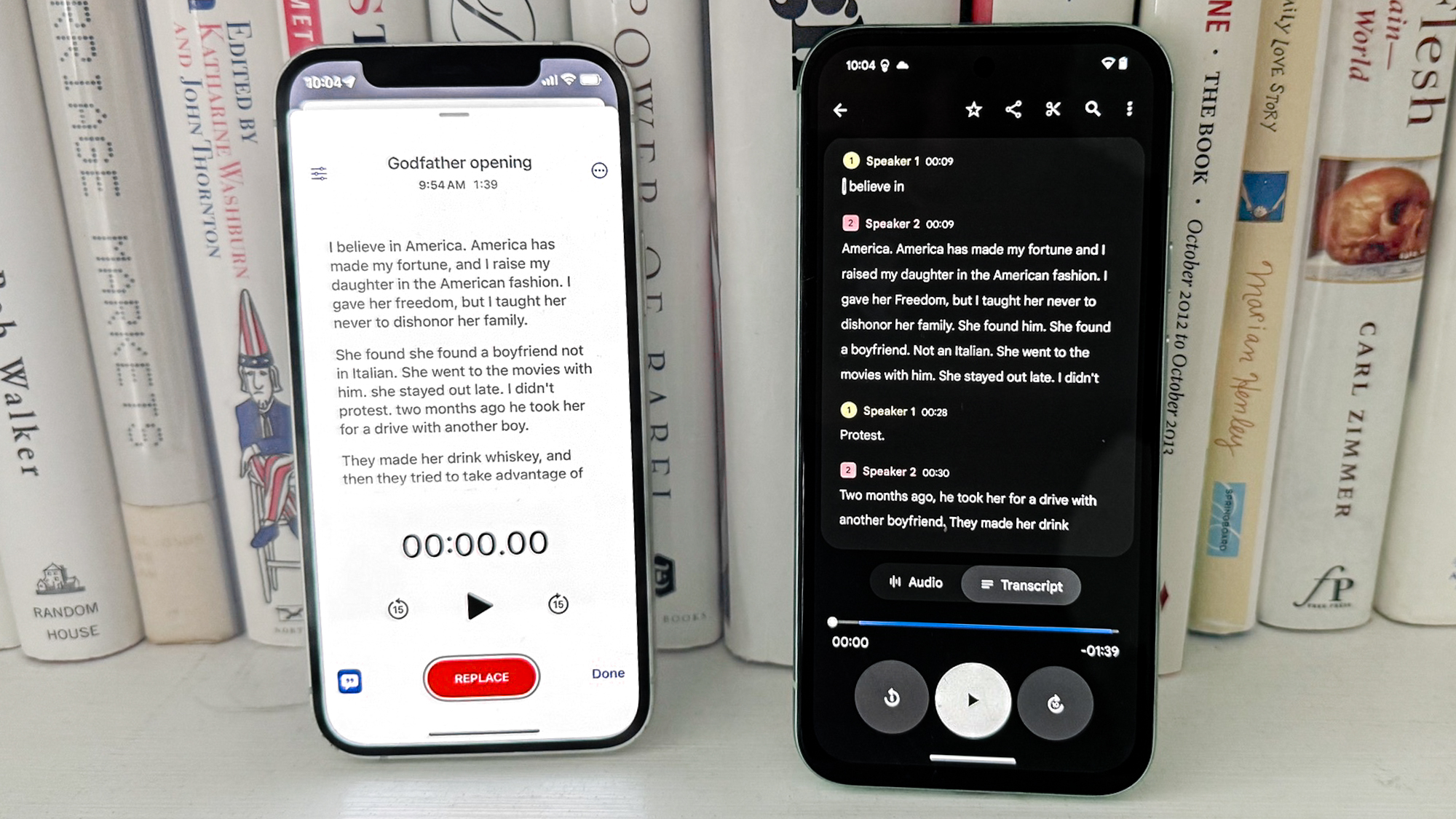

Likely, no one will ever be tested on me reciting the opening monologue from "The Godfather," but it's a speech I'm familiar enough with to see at a glance if Voice Memos and Recorder are up to the task of accurately transcribing it. I placed both phones 2 feet away across a table, tapped record and began reciting poor Bonasera's plea for Don Corleone's intervention. Neither transcript was perfect, but Voice Memos had fewer errors: a couple of cut-off words here and there and an instance where Voice Memos heard "in" instead of "an."

The transcript was sporadically punctuated, with a few run-on sentences (that's common with a lot of transcription features), but overall, it reflected what I said. You wouldn't have to spend much time comparing the written transcript to the audio recording to verify its accuracy, though if you were to reprint the transcript, you'd have to clean up the punctuation.

Recorder on the Pixel 8a introduced a few more errors, mostly in the form of misheard words. ("Bastards" became "baskets," and "I" became "Hi.") Unlike Voice Memos' cut-off words, these misheard words did muddle the meaning of the transcript, and they would require you to listen back to the recording to figure out what was being said. The bigger problem with the Pixel 8a's effort, though, was that it kept assigning different speakers to different parts of the transcript: three speakers in total, even though the only person speaking was me.

From what I can tell, those extra speakers appeared any time I paused for dramatic effect or changed the tenor of my speech to reflect the situation Bonasera was describing. Whatever the reason, it made the Recorder transcript much more difficult to read.

Winner: iPhone Voice Memos

Face-off 2: Multiple speakers

If you record a lot of meetings where there are multiple speakers and a free-flowing exchange of ideas, you'll want a recording app that recognizes when different people are speaking. It helps you see at a glance who said what. And if you're tasked with producing transcripts of a multi-person discussion, having things already split out by speaker gives you a head start over dealing with a big block of text. On paper, then, Google's Pixel phones have an advantage: the Recorder app will recognize when multiple people are speaking. (Sometimes, as we saw in our first test, it will invent phantom speakers, which is less helpful.) That's not an option in the Voice Memos toolkit just yet, so if you're using an iPhone, be prepared to insert your own speaker breaks.

For an initial multi-speaker test, I tried Abbott and Costello's "Who's on First?" routine, as its rapid back-and-forth and interruptions put speaker recognition to the test and come close to approximating the free-for-all that can happen in meetings where people talk over each other.

My wife and I read the different parts sitting next to each other, with the phones placed in front of both of us. As expected, the iPhone transcript proved tough to follow, not only because it didn't split up the exchanges by speaker, but also because run-on sentences and poor punctuation made it hard to tell when one person had stopped speaking and another had butted in. The iPhone also has a tendency to drop words when the transcription feature can't hear things clearly, turning exchanges over who or what is playing second base into impossible-to-decode gibberish.

In one passage, "St. Louis has a good outfield" was transcribed simply as "Louis has a good," with "outfield" dropped along with another speaker's response ("Oh, absolutely."). That's a lot of words for Voice Memos to ignore.

Then again, the Pixel 8a didn't fare much better, even with the built-in advantage of speaker labels. First, the app insisted that three people were speaking instead of two. Google's transcription feature also has a problem where the first few words one person says get appended to the end of the previous speaker's sentence. That can make it confusing to figure out who's saying what just by referring to the auto-generated transcript. Throw in misheard words like "picture" rather than "pitcher," and Google's transcript isn't that much better than what you get from the iPhone.

To see if things improved with a less frenetic exchange, I tried again, this time using some familiar dialogue from "Casablanca." The iPhone's lack of speaker identification still proved to be a significant hurdle, though the transcript was more accurate than before, if you forgive an instance where "Wisbon" appeared instead of "Lisbon." (And right after Lisbon had been accurately transcribed from another speaker, too!) The Pixel's problem of running one speaker's words into another's continued here, even with fewer interruptions and more significant pauses between the two speakers. And once again, a phantom speaker joined what should have been a two-person exchange.

Winner: Twist my arm, and it's probably the Google Recorder app. But it needs to do a better job distinguishing speakers.

Face-off 3: Recognizing accents

All of us speak differently, with regional dialects, different pronunciations and assorted accents making the same words vary from one speaker to the next.

And while most of us are able to adjust when we're doing the listening, transcription features aren't always as adept at accounting for speakers from different parts of the world. Part of my job involves interviewing lots of different people from lots of different areas, all of whom have their own unique speech patterns. When I use a voice recorder to augment my handwritten notes, I often find that the auto-generated transcripts can show a bias against different types of accents.

I wanted to see if the transcription features for the iPhone's Voice Memos and Google's Recorder account for that potential bias. To test accuracy, I recorded a section of the Google I/O 2024 keynote off my computer in which Sundar Pichai talked about the company's efforts with AI. I picked a passage with a lot of technical terms to see how well the transcription features could recognize phrases that don't appear a lot in everyday conversation.

Voice Memos on the iPhone struggled with this passage, mishearing a lot of words ("gymnas" instead of "Gemini era is" and "multiodeleded" instead of "multimodal") and making it really hard to figure out what was said just by looking at the transcript. I had to go back and play the audio while looking at the transcript to truly understand what was being said. While that's not the worst outcome in the world, it does defeat the purpose of glancing at a transcript for a quick reference.

Perhaps the Recorder app benefited from a home-field advantage by transcribing a Google keynote, but it made fewer errors and seemed to recognize technical terms that befuddled Voice Memos. Recorder's biggest errors involved mishearing phrases like "Google I/O," which it transcribed as "Google iron." It was a more accurate transcript overall, though haphazard punctuation made it harder to follow along with what had been said.

Winner: Pixel Recorder

iPhone Voice Memos vs. Pixel Recorder: Verdict

Neither the Voice Memos app nor the Pixel Recorder stood out as a clear-cut winner when it came to transcription features. But if I had to choose one over the other, I'd be more likely to depend on the Recorder app on the Pixel, as its strengths lie in the areas that are more important to me.

The iPhone made fewer errors in the single- and multi-speaker tests, though its tendency to drop words altogether can lead to a confusing transcript. Google squanders its edge with speaker labels by applying them haphazardly, and its random punctuation makes transcripts difficult to scan. I do think Google does a better job accounting for accents and dialects, likely due to its five-year head start on developing transcription.

Both platforms still need to do a lot of work when it comes to transcription, which is understandable in the iPhone's case since the feature is just a few months old. I'd like to see Apple add a speaker labels feature and do a better job of having its model recognize different pronunciations. I think Google really needs to do some work on its speaker labels, too, especially when it comes to recognizing when one person stops speaking and another person starts.

Transcription tools can be a real time-saver, especially if you rely on audio recordings as a study tool or work aid. But there's a lot of room for those features to improve on both Android and the iPhone.