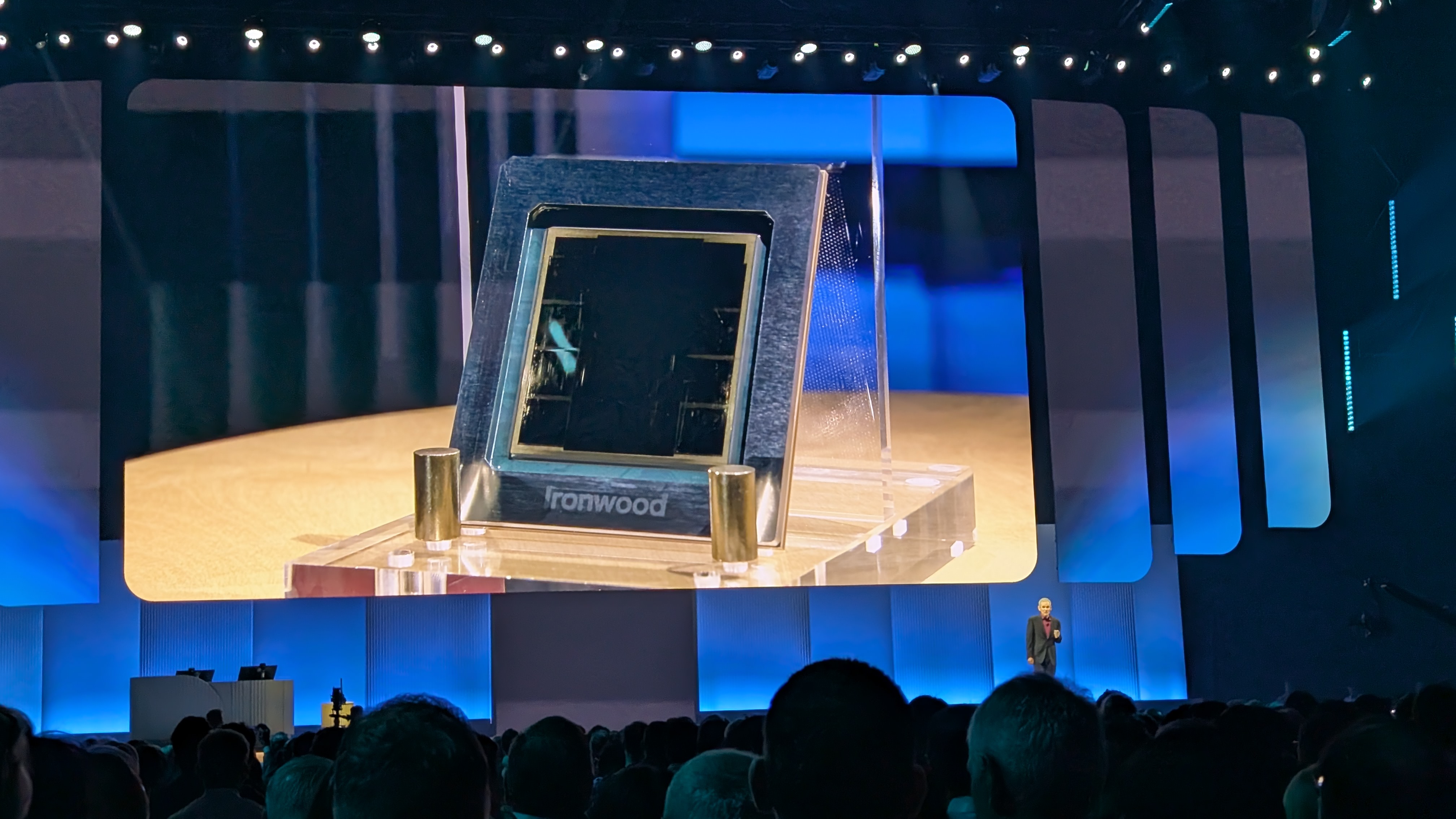

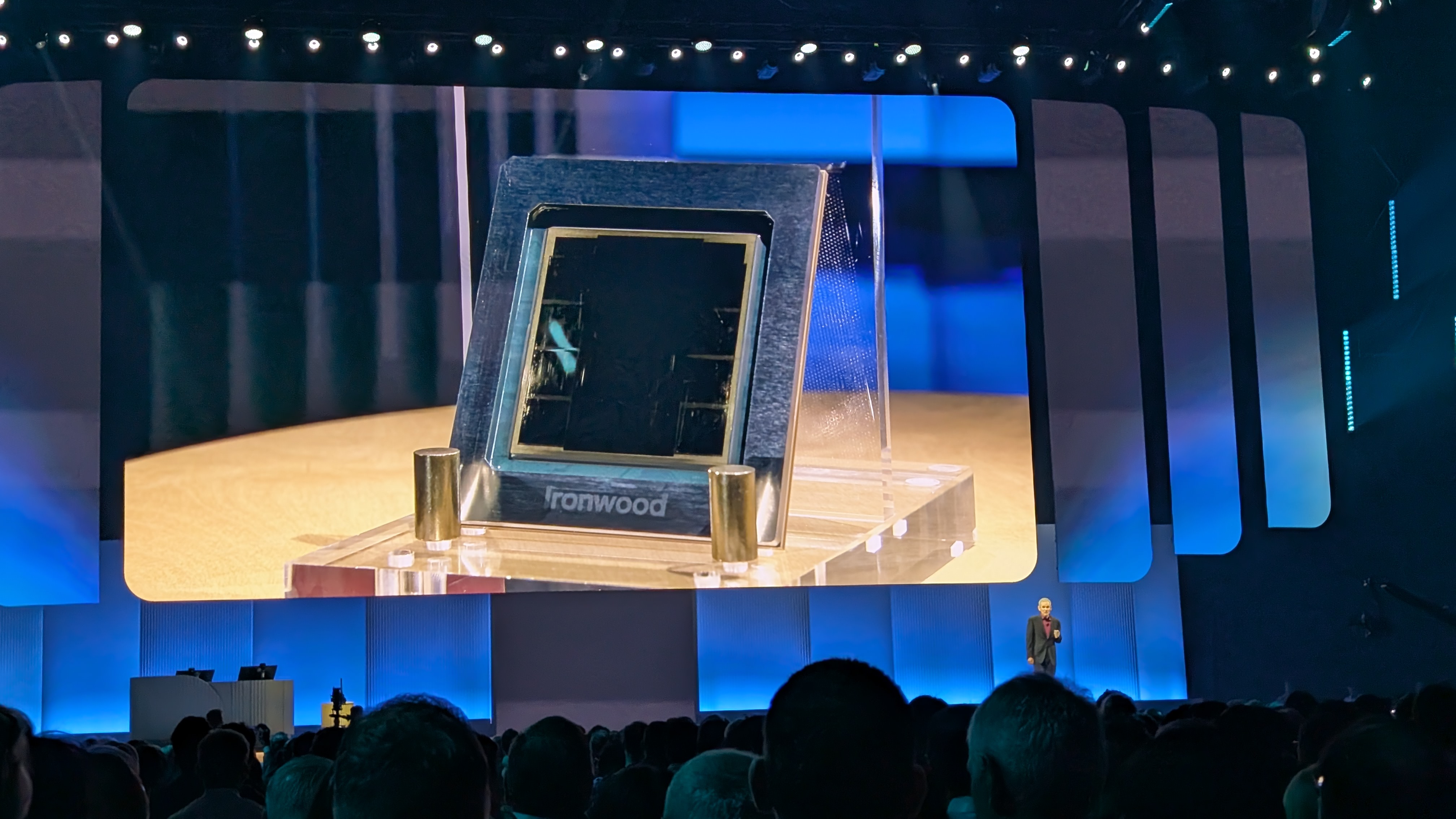

Google unveils Ironwood, its 7th-generation TPU. Ironwood is designed for inference, the new big challenge for AI. It offers huge advances in power and efficiency, and even outperforms the El Capitan supercomputer.

Google has revealed its most powerful AI training hardware to date as it looks to take another major step forward in inference. Ironwood is the company's 7th-generation Tensor Processing Unit (TPU) - the hardware powering both Google Cloud and its customers' AI training and workload handling.

The hardware was revealed at the company's Google Cloud Next 25 event in Las Vegas, where it was keen to highlight the great strides forward in efficiency, which should also mean workloads can run more cost-effectively.

Google Ironwood TPU

The company says Ironwood marks "a significant shift" in the development of AI, forming part of the move from responsive AI models, which simply present real-time information for users to process, towards proactive models which can interpret and infer by themselves.

This is essentially the next generation of AI computing, Google Cloud believes, allowing its most demanding customers to set up and establish ever greater workloads.

At its top end, Ironwood can scale up to 9,216 chips per pod, for a total of 42.5 exaflops - more than 24x the compute power of El Capitan, the world's current largest supercomputer. Each individual chip offers peak compute of 4,614 TFLOPs, which the company says is a huge leap forward in capacity and capability - even at its slightly less grand configuration of "only" 256 chips.

However, the scale can get even greater, as Ironwood allows developers to utilize the company's DeepMind-designed Pathways software stack to harness the combined computing power of tens of thousands of Ironwood TPUs.
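The pod-level figure follows directly from the per-chip number quoted above. As a rough sanity check, the short Python sketch below multiplies chip count by peak per-chip compute; the function and constant names are illustrative only, and the result is a theoretical peak, not a measured benchmark.

```python
# Figures quoted in the article; names are illustrative, not from Google.
CHIPS_PER_POD = 9_216
PEAK_TFLOPS_PER_CHIP = 4_614
TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^18 FLOPs = 10^6 TFLOPs


def pod_peak_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Theoretical peak compute of a pod, in exaflops."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


full_pod = pod_peak_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
small_pod = pod_peak_exaflops(256, PEAK_TFLOPS_PER_CHIP)

print(f"9,216-chip pod: {full_pod:.1f} exaflops")   # 42.5
print(f"256-chip pod:   {small_pod:.2f} exaflops")  # 1.18
```

On these numbers the 256-chip configuration alone comes to a little over one exaflop of peak compute.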

Ironwood also offers a major increase in high bandwidth memory capacity (192GB per chip, up to 6x greater than the previous sixth-generation Trillium TPU) and in memory bandwidth, which can reach 7.2TBps, 4.5x greater than Trillium.

"For more than a decade, TPUs have powered Google’s most demanding AI training and serving workloads, and have enabled our Cloud customers to do the same," noted Amin Vahdat, VP/GM, ML, Systems & Cloud AI. "Ironwood is our most powerful, capable and energy efficient TPU yet. And it's purpose-built to power thinking, inferential AI models at scale."

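The memory figures quoted for Ironwood and Trillium can be cross-checked in the same way. The Python sketch below works backwards from the stated ratios to the Trillium values they imply, and totals the memory across a full pod. The Trillium numbers here are derived from the article's ratios, not quoted specifications, and because the capacity ratio is given as "up to 6x", the derived capacity should be read as approximate.

```python
# Ironwood figures and ratios as quoted in the article.
HBM_GB_PER_CHIP = 192
HBM_BANDWIDTH_TBPS = 7.2
CAPACITY_RATIO_VS_TRILLIUM = 6      # "up to 6x", so treat as approximate
BANDWIDTH_RATIO_VS_TRILLIUM = 4.5
CHIPS_PER_POD = 9_216

# Trillium values implied by the ratios (derived, not quoted specs).
implied_trillium_hbm_gb = HBM_GB_PER_CHIP / CAPACITY_RATIO_VS_TRILLIUM
implied_trillium_bw_tbps = HBM_BANDWIDTH_TBPS / BANDWIDTH_RATIO_VS_TRILLIUM

# Total HBM across a full pod, in decimal petabytes (1 PB = 10^6 GB).
pod_hbm_pb = CHIPS_PER_POD * HBM_GB_PER_CHIP / 1_000_000

print(f"Implied Trillium HBM:       {implied_trillium_hbm_gb:.0f} GB per chip")
print(f"Implied Trillium bandwidth: {implied_trillium_bw_tbps:.1f} TBps")
print(f"HBM in a 9,216-chip pod:    {pod_hbm_pb:.2f} PB")
```

The ratios imply roughly 32GB and 1.6TBps per Trillium chip, and about 1.77PB of high bandwidth memory across a full Ironwood pod.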

Google Cloud unveils Ironwood, its 7th Gen TPU to help boost AI performance and inference

7th-Gen Ironwood TPU marks Google Cloud's next step forward in AI inference capabilities.